- #Apache lucene relevance models how to

- #Apache lucene relevance models pdf

- #Apache lucene relevance models series

Suppose you need to search a large number of files, and you want to find files that contain a certain word or phrase. How would you go about writing a program to do this? A naive approach would be to sequentially scan each file for the given word or phrase. Although this approach would work, it has a number of flaws, the most obvious of which is that it doesn’t scale to larger file sets or cases where files are very large. Here’s where indexing comes in: to search large amounts of text quickly, you must first index that text and convert it into a format that will let you search it rapidly, eliminating the slow sequential scanning process. This conversion process is called indexing, and its output is called an index.

During the indexing step, the document is added to the index. Lucene provides what is necessary for this step, and works quite a bit of magic under a surprisingly simple interface.

Analyzing text before it is indexed raises all sorts of interesting questions: how do you handle compound words? Should you apply spell correction (if your content itself has typos)? Lucene provides an array of built-in analyzers that give you fine control over this process. It’s also straightforward to build your own analyzer, or create arbitrary analyzer chains combining Lucene’s tokenizers and token filters, to customize how tokens are created.
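To make the contrast between sequential scanning and indexing concrete, here is a minimal sketch in plain Python. It is not Lucene's API or its on-disk format: the function names (`sequential_scan`, `build_index`, `search`) and the sample files are made up for illustration, and the "index" is just an in-memory dictionary from each word to the files that contain it.

```python
from collections import defaultdict


def sequential_scan(files, word):
    """Naive approach: re-read the text of every file on every query."""
    word = word.lower()
    return sorted(name for name, text in files.items()
                  if word in text.lower().split())


def build_index(files):
    """Indexing: one pass over the text, producing word -> set of file names."""
    index = defaultdict(set)
    for name, text in files.items():
        for word in text.lower().split():
            index[word].add(name)
    return index


def search(index, word):
    """Searching: a single dictionary lookup, with no rescanning of the text."""
    return sorted(index.get(word.lower(), set()))


# Hypothetical sample content standing in for files on disk.
files = {
    "a.txt": "Lucene is a search library",
    "b.txt": "Indexing makes search fast",
    "c.txt": "Sequential scanning is slow",
}
index = build_index(files)
print(search(index, "search"))  # ['a.txt', 'b.txt']
```

Both functions return the same answer, but the scan does work proportional to the total amount of text on every query, while the index pays that cost once up front and then answers each query with a lookup.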


No search engine indexes text directly: rather, the text must be broken into a series of individual atomic elements called tokens. This is what happens during the Analyze Document step, which determines how the textual fields in the document are divided into a series of tokens. Each token corresponds roughly to a “word” in the language.
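The idea of a tokenizer followed by a chain of token filters can be sketched in a few lines of Python. This is an illustration of the concept only, not Lucene's analyzer classes: the function names, the regular expression, and the tiny stop-word list are all assumptions chosen for the example.

```python
import re

# Illustrative stop-word list; real analyzers ship much longer ones.
STOP_WORDS = {"a", "an", "the", "is", "of"}


def tokenize(text):
    """Tokenizer: split raw text into runs of word characters."""
    return re.findall(r"\w+", text)


def lowercase(tokens):
    """Token filter: normalize case so 'Fox' and 'fox' match."""
    return [t.lower() for t in tokens]


def remove_stop_words(tokens):
    """Token filter: drop very common words that carry little meaning."""
    return [t for t in tokens if t not in STOP_WORDS]


def analyze(text, filters=(lowercase, remove_stop_words)):
    """Analyzer: run the tokenizer, then apply each filter in order."""
    tokens = tokenize(text)
    for token_filter in filters:
        tokens = token_filter(tokens)
    return tokens


print(analyze("The Quick-Brown fox is an Animal"))
# ['quick', 'brown', 'fox', 'animal']
```

Note that this tokenizer silently splits the compound "Quick-Brown" into two tokens. Whether that is the right call depends on the content, which is exactly the kind of decision the analysis step forces you to make.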

Deciding what counts as a document is often obvious: one email message becomes one document, or one PDF file or web page is one document. But sometimes it’s less clear: how should you handle attachments on an email message? Should you glom together all text extracted from the attachments into a single document, or make separate documents, somehow linked back to the original email message, for each attachment?
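As a rough sketch of the second option (separate documents linked back to the message), the following uses Python's standard `email` package. The field names (`id`, `parent_id`, `subject`, `filename`, `body`) and the `#att` id scheme are hypothetical choices for this example. It also assumes text attachments; binary attachments such as PDFs would need a text-extraction step first.

```python
from email.message import EmailMessage


def email_to_documents(msg, msg_id):
    """Return one document for the message plus one per attachment.

    Each attachment document carries a parent_id pointing back at the
    message, so a search hit on an attachment can be traced to its email.
    """
    body = msg.get_body(preferencelist=("plain",))
    docs = [{
        "id": msg_id,
        "subject": str(msg["Subject"]),
        "body": body.get_content() if body is not None else "",
    }]
    for n, part in enumerate(msg.iter_attachments(), start=1):
        docs.append({
            "id": f"{msg_id}#att{n}",
            "parent_id": msg_id,
            "filename": part.get_filename(),
            "body": part.get_content(),  # a str here because the part is text
        })
    return docs


# Hypothetical message with a single plain-text attachment.
msg = EmailMessage()
msg["Subject"] = "Quarterly report"
msg.set_content("Report attached.")
msg.add_attachment("Revenue grew.", filename="report.txt")

docs = email_to_documents(msg, "msg-1")
for doc in docs:
    print(doc["id"], "->", doc.get("parent_id"))
```

The alternative design, a single document per email, would instead concatenate the attachment text into the message's `body` field. That is simpler to index but loses the ability to report which attachment matched.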


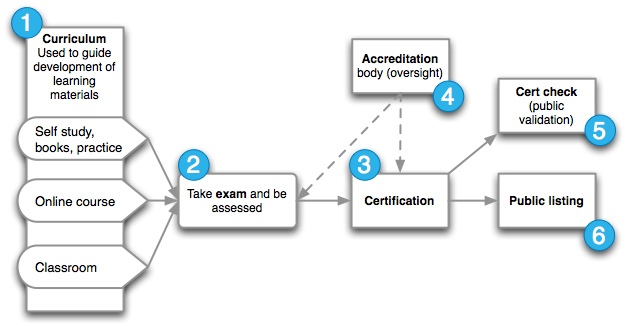

It’s important to grasp the big picture so that you have a clear understanding of which parts Lucene can handle and which parts your application must separately handle. A common misconception is that Lucene is an entire search application, when in fact it’s simply the core indexing and searching component. In the figure above, only the shaded components are handled by Lucene.

The first step, at the bottom of the above figure, is to acquire content. This process, which involves using a crawler or spider, gathers and scopes the content that needs to be indexed. That may be trivial, for example, if you’re indexing a set of XML files that resides in a specific directory in the file system or if all your content resides in a well-organized database. Alternatively, it may be horribly complex and messy if the content is scattered in all sorts of places (file systems, content management systems, Microsoft Exchange, Lotus Domino, various websites, databases, local XML files, CGI scripts running on intranet servers, and so forth).

Once you have the raw content that needs to be indexed, you must translate the content into the units (usually called documents) used by the search engine. The document typically consists of several separately named fields with values, such as title, body, abstract, author, and url. You’ll have to carefully design how to divide the raw content into documents and fields as well as how to compute the value for each of those fields.
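For the trivial case mentioned above, a set of XML files in one directory, the acquire and build-document steps might look like the following sketch. It uses only the Python standard library rather than Lucene. The XML element names (`title`, `author`, `body`), the `acquire` and `build_document` helpers, and the sample file are assumptions made up for the example.

```python
import tempfile
import xml.etree.ElementTree as ET
from pathlib import Path


def acquire(root_dir):
    """Acquire content: collect every .xml file under root_dir."""
    return sorted(Path(root_dir).rglob("*.xml"))


def build_document(path):
    """Build document: map the raw XML onto separately named fields."""
    root = ET.parse(str(path)).getroot()
    return {
        "title": root.findtext("title", default=""),
        "author": root.findtext("author", default=""),
        "body": root.findtext("body", default=""),
        "url": path.as_uri(),  # computed field: where the content came from
    }


# Create one hypothetical XML file in a temporary directory and process it.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "post.xml"
    sample.write_text(
        "<article><title>Intro</title><author>Ann</author>"
        "<body>Lucene indexes documents.</body></article>",
        encoding="utf-8",
    )
    documents = [build_document(p) for p in acquire(tmp)]

print(documents[0]["title"])  # Intro
```

In the messy case, `acquire` is where the complexity lives (crawlers, database queries, connectors to other systems), while `build_document` stays a mapping from whatever was fetched to a fixed set of fields.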
